#FP32 VS FP64 PROFESSIONAL#

NVIDIA's GTX series cards are known for their great FP32 performance but are very poor in FP64 performance: FP64 throughput generally ranges between 1:24 (Kepler) and 1:32 (Maxwell) of FP32 throughput. The exceptions to this are the GTX Titan cards, which blur the line between the consumer GTX series and the professional Tesla/Quadro cards.

One paper proposes a method for implementing dense matrix multiplication in FP64 (DGEMM) and FP32 (SGEMM) using Tensor Cores on NVIDIA's graphics hardware.

Also, the StyleGAN project (GitHub: NVlabs/stylegan, "StyleGAN - Official TensorFlow Implementation") uses an NVIDIA DGX-1 with 8 Tesla V100 16G (FP32 = 15 TFLOPS) to train on a dataset of high-res 1024×1024 images, so I'm getting a bit uncertain whether my specific tasks would require FP64, since my dataset is also high-res images. But "The Best GPUs for Deep Learning in 2020 - An In-depth Analysis" suggests the A100 outperforms the A6000 by ~50% in DL.
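To make the 1:24 and 1:32 ratios concrete, here is a minimal sketch of the arithmetic. The 6.0 TFLOPS FP32 figure is a made-up number for illustration, not the spec of any real card, and `fp64_tflops` is a hypothetical helper name.

```python
def fp64_tflops(fp32_tflops: float, ratio_denominator: int) -> float:
    """Estimate FP64 throughput from FP32 throughput and a 1:N FP64:FP32 ratio."""
    return fp32_tflops / ratio_denominator


# Assumption: an illustrative consumer card with 6.0 TFLOPS of FP32.
HYPOTHETICAL_FP32_TFLOPS = 6.0

# Ratios quoted in the text for consumer GTX cards.
for arch, denominator in (("Kepler", 24), ("Maxwell", 32)):
    estimate = fp64_tflops(HYPOTHETICAL_FP32_TFLOPS, denominator)
    print(f"{arch}: 1:{denominator} -> {estimate:.4f} TFLOPS FP64")
```

This only scales a peak number; real FP64 workloads may land well below it.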

#FP32 VS FP64 64 BIT#

64-bit is only marginally better than 32-bit, as very small gradient values will also be propagated to the very earliest layers. The most popular deep learning library, TensorFlow, uses 32-bit floating point precision by default. The choice is made because it helps in two ways, one of which is lower memory requirements.

Storing half precision (FP16) data instead of higher precision FP32 or FP64 reduces the memory usage of the neural network, allowing training and deployment of larger networks, and FP16 data transfers take less time than FP32 or FP64 transfers. ...indicate that in training the V100 is 40% faster than the P100 with FP32 and...

Always using FP64 would be ideal, but it is just too slow. Sadly, even FP32 is too small and sometimes FP64 is used. If FH could use FP16, INT8 or INT4, it would indeed speed up the simulation.

I compiled in a single table the values I found in various articles and reviews across the web.

Hello, I'm currently looking for a workstation for deep learning in computer vision tasks: image classification, depth prediction, pose estimation. I need at least 80G of VRAM with the potential to add more in the future, but I'm struggling a bit with GPU options. Assuming power consumption wouldn't be a problem, the GPUs I'm comparing are A100 80G PCIe*1 vs. ... They all meet my memory requirement; however, the A100's FP32 is half that of the other two, although with impressive FP64. Based on my findings, we don't really need FP64 unless it's for certain medical applications.
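The memory and small-gradient points above can be sketched with Python's standard `struct` module, which can pack a value as IEEE 754 half, single, or double precision. The parameter count and the gradient value are assumptions chosen for illustration, and the helper names are hypothetical.

```python
import struct

# struct format codes for IEEE 754 half, single, and double precision.
FORMATS = {"FP16": "<e", "FP32": "<f", "FP64": "<d"}


def bytes_per_value(name: str) -> int:
    """Storage size in bytes of one value in the given format."""
    return struct.calcsize(FORMATS[name])


def round_trip(name: str, value: float) -> float:
    """Store a Python float in the given format and read it back."""
    code = FORMATS[name]
    return struct.unpack(code, struct.pack(code, value))[0]


def model_megabytes(num_params: int, name: str) -> float:
    """Memory needed for the weights alone, in MB (10**6 bytes)."""
    return num_params * bytes_per_value(name) / 1e6


NUM_PARAMS = 25_000_000  # assumption: a hypothetical 25M-parameter network
TINY_GRADIENT = 1e-8     # assumption: an illustrative very small gradient

for name in FORMATS:
    print(
        f"{name}: {bytes_per_value(name)} bytes/value, "
        f"{model_megabytes(NUM_PARAMS, name):.0f} MB of weights, "
        f"gradient stored as {round_trip(name, TINY_GRADIENT)!r}"
    )
```

The tiny gradient underflows to zero in FP16 but survives in FP32 and FP64, while each step up in precision doubles the storage. This covers weights only; activations and optimizer state add to the total.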


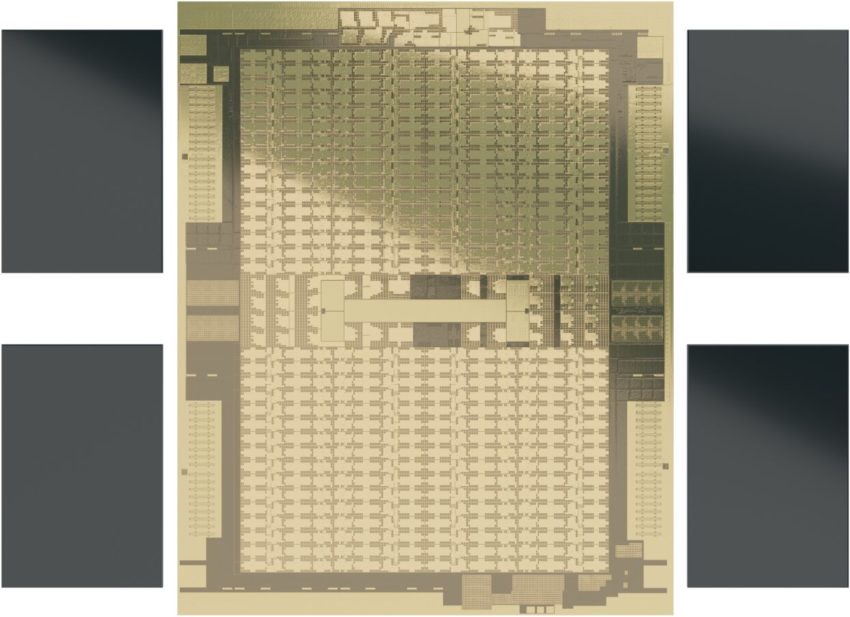

Here is the GFLOPS comparative table of recent AMD Radeon and NVIDIA GeForce GPUs in FP32 (single precision floating point) and FP64 (double precision floating point).

In this blog, we will introduce the NVIDIA Tesla Volta-based V100 GPU and evaluate...

Like, crazy similar, as you can see here. We never did know the size of the Level 0 caches on Volta, and we don't know the size on the Ampere GPU, either. Each SP has sixteen 32-bit integer units (INT32), sixteen 32-bit floating point units (FP32), and eight 64-bit floating point units (FP64).
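The unit counts quoted above imply an FP64:FP32 ratio for this design. A small sketch of that arithmetic follows, assuming each unit retires the same number of operations per clock (an assumption, not something the text states); `fp64_to_fp32_ratio` is a hypothetical helper.

```python
from fractions import Fraction


def fp64_to_fp32_ratio(fp64_units: int, fp32_units: int) -> Fraction:
    """Ratio of FP64 to FP32 units, reduced to lowest terms."""
    return Fraction(fp64_units, fp32_units)


# Unit counts per SP as quoted in the text: eight FP64, sixteen FP32.
ratio = fp64_to_fp32_ratio(8, 16)
print(f"FP64:FP32 = {ratio.numerator}:{ratio.denominator}")  # prints "FP64:FP32 = 1:2"
```

Compare this with the 1:24 and 1:32 ratios quoted for consumer GTX cards.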
